Big Data After The Internet

Until 1995, most people did not know about the Internet. It was hard to use until the Netscape browser arrived, followed by its famous IPO. With Netscape, anyone could create material and anyone with a connection could view it.

The Internet's popularity led to a mushrooming of websites such as AOL, MSN, Yahoo, CNN, Napster and many more. They provided free information-sharing services such as email, chat, photo sharing, video sharing, blogging, news, weather, music and games. These sites generated, collected and shared an enormous amount of data for people all over the globe. There were, of course, new-generation e-commerce companies like Amazon and eBay that also contributed to the overall information available, but sharing information was not at the core of their strategy.

Why is this phenomenon of information sharing noteworthy? There are two good reasons:


- The data on the Internet was freely available to everyone on the Internet. It was no longer buried inside a database of a company or confined within its local network. The Internet was the new database.

- The data available was meant for human consumption, and it came in many forms. It was in free text such as messages, chats and emails; it was in blogs, photographs and videos; it was in pretty much everything available over the Internet. It was also in the interactions that people had with these websites, though that data was not available over the Internet. Another peculiarity of this Internet data was that it was unstructured, that is, not suitable for representation in a table-and-column format. It followed no schema.

Google Search

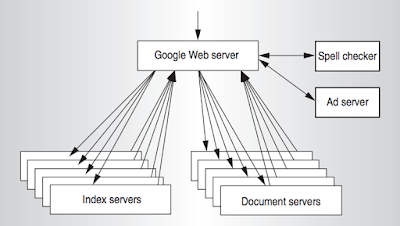

Google was incorporated as a company in September 1998. It released a search engine that anyone could use at no cost. Although Google did not invent the search engine, it revolutionized how search was done over the Internet. Its search engine had three broad components: Crawling, Indexing, and Retrieval. For a user to be able to search, Google does the crawling and indexing upfront. Very briefly, the crawling process goes over all the pages on the Internet that are visible to Google and stores them as documents in a document repository. The indexing process goes over these documents and creates an inverted index of the words used in each document. This index is conceptually similar to the index found at the end of most books. The documents are ranked on many criteria, one of which is the popularity of the document's website. When a user searches, Google finds the index entries matching the search words, gets the corresponding documents, and sorts them by rank and relevance to the user. The results are then presented to the user.

Google Search Architecture. Image source: Google
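The indexing and retrieval steps described above can be sketched in a few lines of Python. This is only a toy illustration, not Google's implementation: the documents, the `rank` scores and the function names are all made up for the example, and real ranking uses far more signals than a single number per page.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_index(documents):
    """Map each word to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in tokenize(text):
            index[word].add(doc_id)
    return index


def search(query, index, rank):
    """Return ids of documents containing every query word, best rank first."""
    words = tokenize(query)
    if not words:
        return []
    matches = set.intersection(*(index.get(word, set()) for word in words))
    # Higher rank first; ties are broken by document id for a stable order.
    return sorted(matches, key=lambda doc_id: (-rank.get(doc_id, 0.0), doc_id))


# Hypothetical "crawled" pages and made-up popularity scores.
documents = {
    "page1": "Big data on the Internet",
    "page2": "Search the Internet with a search engine",
    "page3": "Data warehouse and big data analytics",
}
rank = {"page1": 0.4, "page2": 0.9, "page3": 0.7}

index = build_inverted_index(documents)
print(search("big data", index, rank))  # ['page3', 'page1']
print(search("internet", index, rank))  # ['page2', 'page1']
```

Because the index is built upfront, a query only touches the entries for its own words instead of scanning every document, which is what makes answering in real time feasible.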

Why is this important from a Big Data point of view? The answer lies in the fact that Google created a unique system. This system could store the data from the entire visible Web, process that data, and respond to user search queries in real time. In doing so, Google made two things possible that had not been seen before at such a large scale in any system:

- Google was primarily storing and processing unstructured data, that is, data not suited to the table-and-column storage provided by an RDBMS.

- The system was built using a cluster of low-cost PCs, making it economically viable.

  - The low reliability of commodity hardware didn't matter. Their software was built to withstand hardware failures.

  - The low performance of the hardware didn't matter. Performance was achieved by parallelizing the computation.
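The two ideas behind the low-cost cluster, tolerating failures in software and gaining speed through parallelism, can be illustrated with a small sketch. Everything here is hypothetical: the machine names, the shard layout and the simulated failure are invented for the example, and Python threads merely stand in for separate machines.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical cluster layout: every shard of data lives on two machines.
SHARD_REPLICAS = {
    "shard-0": ["pc-1", "pc-2"],
    "shard-1": ["pc-2", "pc-3"],
    "shard-2": ["pc-3", "pc-1"],
}
SHARD_DATA = {
    "shard-0": [3, 5, 8],
    "shard-1": [13, 21],
    "shard-2": [34, 55, 89],
}
FAILED_MACHINES = {"pc-2"}  # simulated hardware failure


def read_shard(shard, machine):
    """Pretend to read a shard from one machine; fail if that machine is down."""
    if machine in FAILED_MACHINES:
        raise ConnectionError(f"{machine} is down")
    return SHARD_DATA[shard]


def process_shard(shard):
    """Sum one shard, falling back to the next replica when a machine fails."""
    for machine in SHARD_REPLICAS[shard]:
        try:
            return sum(read_shard(shard, machine))
        except ConnectionError:
            continue  # try the next replica
    raise RuntimeError(f"all replicas of {shard} are unavailable")


def process_all(shards):
    """Process all shards in parallel and combine the partial results."""
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        partial_sums = list(pool.map(process_shard, shards))
    return sum(partial_sums)


total = process_all(sorted(SHARD_DATA))
print(total)  # 228
```

Even though `pc-2` is down, the job still completes because `shard-1` is read from its second replica, and no single machine has to process the whole dataset.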

Google subsequently published a series of research papers which provided useful insight into their system. Some important ones were:

- Google File System, Oct 2003

- MapReduce, Dec 2004

- Google Bigtable, Nov 2006

Hadoop

In 2003, Doug Cutting was working on an open source web crawler, very similar to what Google had produced. Its name was Apache Nutch. It was not possible for him to store the huge number of web pages fetched from the Internet on a single machine. He needed a distributed system that used multiple machines for file storage. Around that time, Google published a research paper on its distributed file system, the Google File System. He used the same approach to create the Nutch Distributed File System, which later became the Hadoop Distributed File System.
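The core idea of such a distributed file system, splitting a file into blocks and keeping copies of each block on several machines, can be sketched as follows. This is a simplified illustration under stated assumptions: the tiny block size, the node names and the round-robin placement are chosen for readability, whereas real systems use far larger blocks and more careful placement policies.

```python
def split_into_blocks(data, block_size):
    """Cut a byte string into consecutive blocks of at most block_size bytes."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_replicas(num_blocks, machines, replication):
    """Assign every block to `replication` distinct machines, round-robin."""
    if replication > len(machines):
        raise ValueError("not enough machines for the requested replication")
    placement = {}
    for block_id in range(num_blocks):
        placement[block_id] = [
            machines[(block_id + offset) % len(machines)]
            for offset in range(replication)
        ]
    return placement


# Hypothetical file and cluster; sizes are tiny so the result is easy to read.
file_contents = b"abcdefghij"
machines = ["node-a", "node-b", "node-c", "node-d"]

blocks = split_into_blocks(file_contents, block_size=4)
placement = place_replicas(len(blocks), machines, replication=3)

print(blocks)     # [b'abcd', b'efgh', b'ij']
print(placement)  # {0: ['node-a', 'node-b', 'node-c'], 1: [...], 2: [...]}
```

With three copies of every block, the file remains readable when a machine fails, and a file larger than any single disk can still be stored across the cluster.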

Doug Cutting's next problem was indexing the files stored on the distributed file system. He needed a mechanism to run an indexing algorithm on his cluster. Once again Google helped: in 2004 it published the 'MapReduce' research paper. MapReduce was the perfect solution to this problem. He again borrowed the concept from Google and implemented it in Nutch.

MapReduce was a parallel processing algorithm. It was game-changing in its approach to processing data:

- MapReduce shifted data processing to the data nodes in the cluster, right where the data resides. In this regard, it was remarkably different from MPP systems (previous post - Big Data Before The Internet), which could only query or fetch data in parallel. MapReduce parallelized even the data processing part and did away with moving data to a separate data processing framework.

- One of the important challenges with Big Data is connecting related information scattered across different locations in large datasets. The MapReduce algorithm was built with the ability to extract, connect and aggregate related data in parallel.

MapReduce process for a text word count
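The word count example can be sketched in plain Python to show the map, shuffle and reduce steps. This is a single-process illustration, not Hadoop code: the function names and the input splits are made up, and in a real cluster each map call would run on the node that holds its split.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word, 1) for word in line.lower().split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key so each word's counts meet."""
    grouped = defaultdict(list)
    for word, count in pairs:
        grouped[word].append(count)
    return grouped


def reduce_phase(word, counts):
    """Reduce: aggregate the grouped values for a single word."""
    return word, sum(counts)


def word_count(splits):
    """Run the three phases over all input splits and return word totals."""
    mapped = chain.from_iterable(map_phase(line) for line in splits)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(word, counts) for word, counts in grouped.items())


# Hypothetical input, one string per split.
splits = ["deer bear river", "car car river", "deer car bear"]
counts = word_count(splits)
print(counts)  # {'deer': 2, 'bear': 2, 'river': 2, 'car': 3}
```

The map calls are independent of one another and so are the reduce calls, which is why both phases can be spread across many machines; only the shuffle step needs to move data between them.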

In April 2006, Hadoop was released with HDFS (Hadoop Distributed File System) and Hadoop MapReduce. This meant that capabilities similar to what Google had developed came to the open source world as an Apache project called Hadoop. Hadoop gained popularity quickly after its release. Many big companies such as Yahoo, Facebook, LinkedIn, eBay, IBM, Apple, Twitter and Amazon contributed to the codebase. Companies like Cloudera and Hortonworks were born to support organizations with Hadoop implementations. They are also among the most active contributors to the Hadoop codebase. Many supporting open source projects were built on top of the Hadoop platform, such as HBase (2008), ZooKeeper (2008), Pig (2009), Hive (2009), YARN (2011), Storm (2011), Spark (2012) and Kafka (2012), and the list goes on. They are popularly known as products of the Hadoop ecosystem.

Over the years, the Hadoop ecosystem has evolved tremendously and has become a solution of choice for storing and processing unstructured data at petabyte scale. Its maturity can be gauged from the fact that in 2016 Yahoo was using 35,000 Hadoop servers in 16 clusters, holding 600 petabytes of data.

Unstructured Data

The ability to store and process unstructured data at petabyte scale has applications in astronomy, genome research, the environment, medicine, security, disaster management, traffic management and many more fields. It also has applications in an enterprise environment. Analytics on log files, click streams, chats and emails can yield useful insights into customer behavior, product demand, pricing, security and so on.

Bill Inmon, regarded by many as the father of Data Warehousing, recognized the value of Big Data in his book 'Data Warehousing 2.0', published in 2009. He said, "It comes as a surprise to many people that in most corporations the majority of data in the corporation is not repetitive transaction-based data. It is estimated that approximately 80% of the data in the corporation is unstructured textual data, not transaction based". The value hidden inside unstructured data should be unlocked for a much richer level of analytics.

In an enterprise, Hadoop can be used as long-term storage for both the structured data of a Data Warehouse and unstructured data collected from various other sources. Storing data in Hadoop is far cheaper than storing it in a Data Warehouse. With the improvements in Hadoop, the data inside it can be easily queried and analyzed. However, Hadoop is not yet an effective replacement for a Data Warehouse. The biggest reason is that the Data Warehouse architecture ensures the integrity of data. The trust in the accuracy of its reports, built over a long period of time, cannot be matched by Hadoop and the process followed to gather and integrate unstructured data. Moreover, running queries on Hadoop takes a relatively long time, and Hadoop query interfaces are not as straightforward as running a SQL query on a Data Warehouse. So today, Hadoop complements Data Warehouses, either by pushing processed unstructured data into them for integrated analytics or by acting as an independent repository for unstructured data analytics.

Unstructured data in a PDF document. Image source: Cloudera blog

Big Data

3 Vs of Big Data

In the first phase of Big Data, the biggest challenge was the 'Volume' of data. With the arrival of the Internet, unstructured data was added as one more dimension of Big Data. It is called the 'Variety' of data. The Internet user load also brought a need for systems that can quickly process incoming data. Twitter and Facebook are some quick examples. This dimension of Big Data is called 'Velocity'. We haven't yet seen the full-blown 'Velocity' aspect in the field of analytics. Analytics today is largely batch-oriented, i.e. it deals with old data collected over a period of time. This period can range from seconds to hours to days. The need for speed in analytics varies from system to system. In areas like stock trading, security, life support and aerospace, systems need to analyze events and react within a few milliseconds. A delay of a second can mean a life-threatening event or a lost opportunity. I will write about the emerging architectures of Analytics and the Internet of Things in my next post. Thanks for reading.

References

- We are the Web - Wired.com

- Our Story - Google.com

- How does Google search works? - Google

- Web search for a planet - Google

- The Google File System - Google

- MapReduce: Simplified Data Processing on Large Clusters - Google

- Bigtable: A Distributed Storage System for Structured Data - Google

- The history of Hadoop - Mark Bonaci

- Lucene : The good parts - Andrew Montalenti

- Apache Hadoop distribution archive

- Which Big Data Company has the World’s Biggest Hadoop Cluster?

- Cluster Sizes Reveal Hadoop Maturity Curve

- Fast and Furious: Big Data Analytics Meets Hadoop

- Big-Data Computing: Creating revolutionary breakthroughs in commerce, science, and society - Computing Community Consortium

- Evolution Of Business Intelligence - Bill Inmon

- Reality Check: Contributions to Apache Hadoop - Hortonworks
